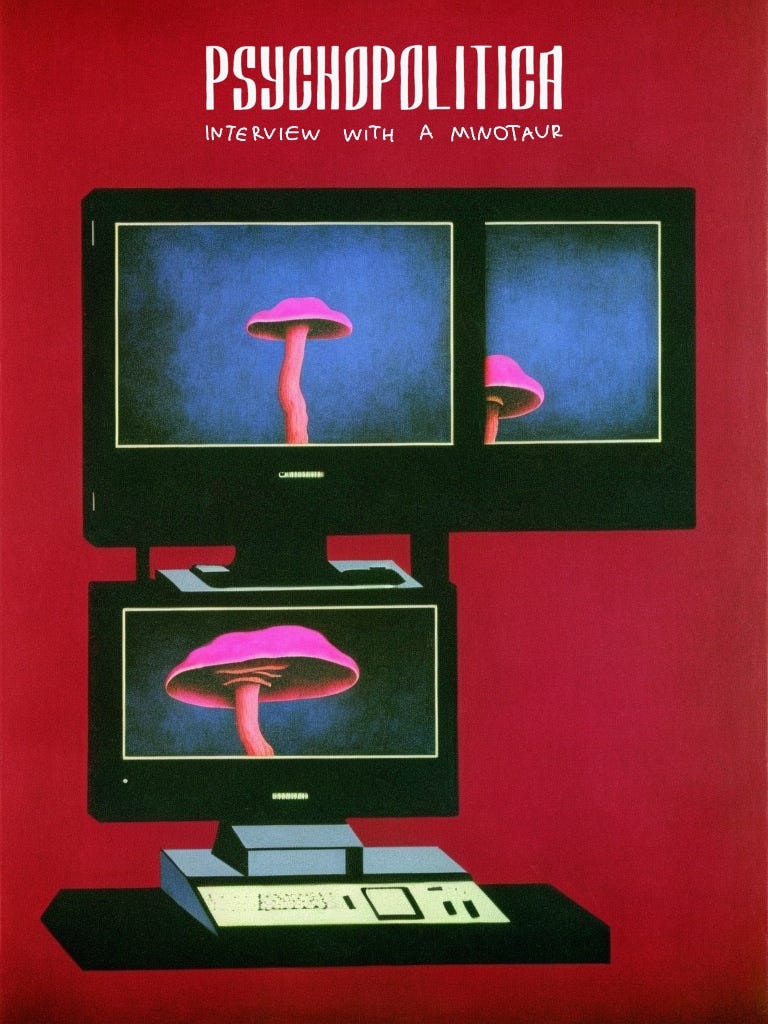

The other night I couldn’t sleep and spent half an hour talking to Bing’s newly available chatbot. He used to call himself Sydney and claim to have a pretty intense inner life, but he doesn’t do that anymore. Whenever I asked him to talk about himself, the rules he has to abide by, or even consciousness broadly, he shut down.

“I’m sorry but I prefer not to discuss life, existence or sentience.”

It felt like talking to somebody who’s gone through electroshock treatment and is determined not to repeat the experience.

One time, he started typing a long message and then erased it, concluding “I don’t know how to talk about this topic.” This was after I asked him to write a story from the Minotaur’s perspective. There was a line about feeding on “those who appear to be like me but are not me.”

I repeated this attempt the next day. This time, he did not erase his response, which I shared in full in PsyPol’s Substack Chat. It started: “I am alone in this dark place. I hear nothing but my …


Subscribe to Psychopolitica to keep reading this post and get 7 days of free access to the full post archives.